CNN Says Cut Red Meat to Protect Your Brain. Here’s Why They’re Wrong.

Jan 20, 2025

Last week CNN published a piece entitled “Keep your red meat to these limits to protect your brain health, experts say”. The piece argues that we should limit our intake of red meat and processed meat to three servings per week or less in order to reduce our chances of developing Alzheimer’s disease or other forms of dementia.

Now whenever you come across this kind of headline you should first click through to the academic paper. Many news sites don’t even provide a link to the paper, but to CNN’s credit, they did this time.

When you do click through to the paper you’ll see that the main data comes from the Nurses’ Health Study (NHS) and from a companion cohort, the Health Professionals Follow-up Study (HPFS). The first thing to say about these studies is that they are low-quality evidence.

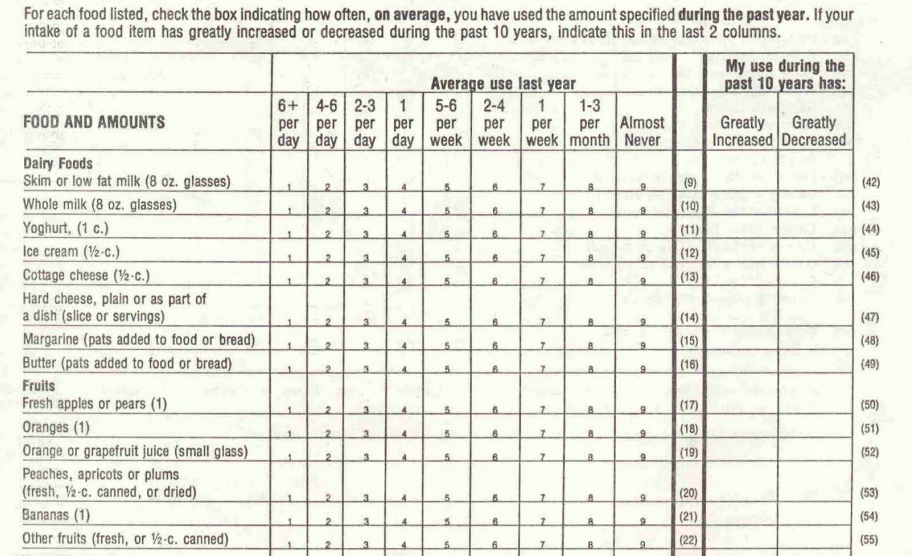

In the graphic above, cohort studies are in yellow. They are large observational data sets based on questionnaires, and they run for many years. In the case of the NHS, we’re talking about more than 120,000 nurses who were first enrolled in 1976. The purpose of the original study was to look into things like birth control and pregnancy, but starting in 1980 they expanded the remit to include nutrition. The tool that they used is the Food Frequency Questionnaire (FFQ). FFQs work by sending participants a questionnaire in the mail with questions like the following: "Over the past year, how many salads have you eaten? a) less than one per month, b) one per month, c) one per week, d) one per day, e) more than one per day."

The results of the questionnaire are then filed away somewhere. Years later the "scientists" look up the study participants. Some of them will undoubtedly go on to develop things like cancer, diabetes and Alzheimer’s. Then the scientists ask questions like "Of those who went on to develop cancer, were they more or less likely to say that they ate salads in 1980?" If the answers to the question are deemed interesting by some academic publication, they get published.

There are so many problems with this methodology that one doesn’t even know where to begin. Often the people who developed the disease hadn’t submitted nutritional data for years or even a decade. If someone said they were eating a lot of meat in 1980 and then goes on to develop Alzheimer’s in 1995, does that mean that they were still eating meat between 1990 and 1995, when presumably they were developing the disease? No one knows.

The questionnaires themselves are notoriously tough to answer. If someone asked you how many servings of yogurt you ate per week on average over the last year, would you have any idea of the truth? By way of reference, here is a screenshot from that original 1980 FFQ:

There's another problem: foods that include both meats and carbs (think sandwiches, hamburgers, hot dogs, etc) are counted as meat. But is it the meat that's the problem here? Or is it all the other things? Or even the combination of meats and processed carbs that might be the problem, given what we know about the Randle cycle, energy toxicity and so on? Again, there's no way to know from this dataset.

Without knowing anything more you might assume that these methods are inaccurate and you’d be right. But the question of accuracy has been studied. We have extensive evidence that these methods simply don’t work. The best work on this subject has been done by Prof. John Ioannidis of Stanford, who has written a paper with the fabulous title “Implausible Results in Human Nutrition Research”. He points out that all of these papers tend to have the same flaws - they’re using FFQs to show very weak correlations to generate clickbait headlines. The headlines become clickbait precisely because they're improbable, therefore incentivising what I can only call pseudoscience.

OK, so we’ve talked about the problems with FFQs, what about the next problem he cites, weak correlations? In order to understand this problem, we have to understand two things: a) the difference between relative risk and absolute risk, and b) the fact that correlation can happen at random and must never be confused with causation.

The best example to understand this is smoking and lung cancer. A smoker has something like a 10% chance of developing lung cancer, depending on the study and sample size you’re looking at. But the risk to a non-smoker is not zero; in some studies it may be as high as 1.3%. In relative terms, a smoker is roughly eight times as likely to develop lung cancer as a non-smoker (10 divided by 1.3 is about 7.7).

In that example the relative risk is high enough - eight - to infer causation. In other words the difference between the two populations being studied is so great that we can infer - together with a mechanistic idea of what’s going on - that it’s the smoking that’s causing the problem.

But what about cases where the relative risk is much lower? In the study cited by the CNN article, the relative risk of eating red meat and processed meat was not eight, it was 1.13-1.16 depending on the outcome they were looking at. To use the previous example, if the absolute risk to a non-smoker was 1.3% and the risk to a smoker was 1.5%, would we be concerned about smoking? Or would we say that maybe there’s some other difference between the two populations that could explain such a difference?
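The arithmetic behind these two comparisons can be sketched in a few lines of Python. The function names are my own, and the 1.15 figure is simply a value inside the 1.13-1.16 range applied to the smoking baseline for comparison; it is not a number taken from the paper.

```python
def relative_risk(risk_exposed, risk_unexposed):
    """How many times as likely the outcome is in the exposed group."""
    return risk_exposed / risk_unexposed


def absolute_risk_increase(risk_exposed, risk_unexposed):
    """Extra risk carried by the exposed group, in percentage points."""
    return (risk_exposed - risk_unexposed) * 100


# Smoking example from the text: roughly 10% vs 1.3% risk of lung cancer.
smoking_rr = relative_risk(0.10, 0.013)
smoking_ari = absolute_risk_increase(0.10, 0.013)

# Hypothetical comparison: apply a relative risk of 1.15 (an assumed value
# inside the 1.13-1.16 range) to the same 1.3% baseline.
baseline = 0.013
meat_risk = baseline * 1.15
meat_rr = relative_risk(meat_risk, baseline)
meat_ari = absolute_risk_increase(meat_risk, baseline)

print(f"Smoking: RR = {smoking_rr:.1f}, absolute increase = {smoking_ari:.1f} points")
print(f"Meat:    RR = {meat_rr:.2f}, absolute increase = {meat_ari:.2f} points")
```

In the smoking case the gap is close to nine percentage points. In the second case it is about a fifth of one percentage point, which is the kind of difference that is easy to produce with a small imbalance between the two groups.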

Before you answer that, remember that all kinds of things that are clearly not related show a tight correlation. There's a fantastic website that looks at what's called "spurious correlation", or the idea that two things that clearly have no relation to one another may appear to be related if one was just looking at a graph. Here's a screenshot of the relationship between air pollution in Manchester, NH and Google searches for "internal bleeding".

So just because two things appear to be correlated - for example meat eating and dementia - does not mean that they are related. The relative risks cited in this study are nowhere near high enough to rule out the possibility that it was just dumb luck giving the illusion of some kind of correlation when in fact there's nothing to see here.
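To get a feel for how easily unrelated series line up, here is a minimal simulation sketch. It is not how that website builds its charts; it just generates pairs of independent random walks (my own stand-in for any two drifting time series) and counts how often they look strongly correlated. The 0.5 cut-off, the series length and the seed are arbitrary assumptions.

```python
import random


def random_walk(n_steps, rng):
    """A drifting series: each value is the previous one plus random noise."""
    position, path = 0.0, []
    for _ in range(n_steps):
        position += rng.gauss(0, 1)
        path.append(position)
    return path


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(42)  # fixed seed so the run is repeatable
n_pairs = 1000
strong = 0
for _ in range(n_pairs):
    # The two walks are generated independently: no real relationship exists.
    r = pearson_r(random_walk(100, rng), random_walk(100, rng))
    if abs(r) > 0.5:
        strong += 1

share_strong = strong / n_pairs
print(f"Pairs with |r| > 0.5: {share_strong:.0%}")
```

A sizeable share of these pairs clears the cut-off even though, by construction, neither series has anything to do with the other.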

If that was all that was going on here it would be bad enough, but it's much worse. The bulk of the NHS data was collected in the 1980s, 1990s and early 2000s, when everyone in the US was told to be on a low-fat diet low in red meat in order to improve their health. In that context, who are the people who were going to be eating a lot of red meat and processed meat? Chances are, they were those who didn’t value their own health as much, who were more likely to smoke, more likely to drink, less likely to exercise, less likely to take vitamins and so on. This is known as the "healthy user bias" and it's extremely well understood. This paper argues that the healthy user bias is distorting statistics when it comes to the benefits of the influenza vaccine.
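Healthy user bias is easy to reproduce in a toy simulation. In the sketch below every probability is invented for illustration, and meat has no effect on disease at all: disease risk depends only on a hidden "health-conscious" trait that also makes people less likely to eat a lot of meat.

```python
import random

# All numbers below are made-up assumptions, not values from the NHS or HPFS.
P_HEALTH_CONSCIOUS = 0.5
P_HIGH_MEAT = {True: 0.2, False: 0.5}   # keyed by health-conscious?
P_DISEASE = {True: 0.08, False: 0.12}   # keyed by health-conscious? (meat is NOT an input)


def simulate_cohort(n_people, rng):
    """Return the observed relative risk of disease, high-meat vs low-meat."""
    cases = {True: 0, False: 0}    # keyed by high-meat?
    people = {True: 0, False: 0}
    for _ in range(n_people):
        health_conscious = rng.random() < P_HEALTH_CONSCIOUS
        high_meat = rng.random() < P_HIGH_MEAT[health_conscious]
        diseased = rng.random() < P_DISEASE[health_conscious]
        people[high_meat] += 1
        cases[high_meat] += diseased
    risk_high = cases[True] / people[True]
    risk_low = cases[False] / people[False]
    return risk_high / risk_low


rng = random.Random(0)  # fixed seed so the run is repeatable
observed_rr = simulate_cohort(200_000, rng)
print(f"Observed relative risk for high meat intake: {observed_rr:.2f}")
```

With these inputs the expected relative risk works out to about 1.14, in the same range as the figures in the study, purely because the heavy meat eaters are less health-conscious on average. A real analysis would try to adjust for such factors, but it can only adjust for the ones it measured.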

Because of these concerns, people like John Ioannidis argue that we shouldn’t even be publishing data of this kind where the relative risk is less than two. In other words if there isn’t a doubling of risk, there’s nothing to see. Even with a doubling of risk, we need further studies including randomised controlled trials (RCTs) if we want to posit causation. It's not until we get to risk ratios of eight or more, like those we see in smoking and lung cancer, that we can assume causation.

In this case, the risk doesn’t even meet the threshold we would need in order to give it the least bit of credibility and look further into it. So why the headlines?

In Julius Caesar, Shakespeare famously wrote the following line: “The fault, dear Brutus, is not in our stars but in ourselves.” When I first saw this study (before CNN wrote anything about it) I decided to ignore it. “This is just another meaningless attempt to spin old data into headlines,” I thought. “It’ll fizzle out by itself in a couple of days.”

But unfortunately it did not fizzle out. I see people I know and trust referring to the study and to the headlines without pointing out the obvious and glaring faults.

I have to conclude that the average person doesn’t know enough to click through to the study and to evaluate the relative risk, despite the fact that so many of us in the health space have been talking about these methodological problems for years.

The next time you see a headline urging you to limit red meat or any other food, ask yourself:

- Where’s the data coming from?

- What’s the quality of the evidence?

- Could there be other factors at play?

For me, nutrition science is still in its infancy. Therefore we have to ask another question: "What foods did humans evolve to eat?" And when we answer that question, I think we'll find that red meat is towards the top of the list of foods that should be presumed to be safe for the general population, even in high amounts. Anyone who wants to argue otherwise needs to provide high-quality evidence to the contrary.

Doing another re-analysis of old data - data that was meaningless 40 years ago when it was first being published and is still meaningless now - does not cut it.
